Autonomous vehicles are transforming the way we think about transportation. They promise safer roads and more efficient travel. But what makes these vehicles tick?

The journey from Advanced Driver Assistance Systems (ADAS) to full autonomy is fascinating. It involves a complex blend of hardware and software. Understanding this evolution is key to grasping the future of driving.

Vehicle sensors like LiDAR, radar, and cameras play a crucial role. They help the vehicle perceive its surroundings accurately. This perception is vital for safe navigation.

Autonomous systems rely heavily on advanced computing and AI. These technologies process vast amounts of data in real-time. They enable vehicles to make smart decisions on the road.

As we explore this topic, we’ll uncover the hardware behind autonomous vehicles. From ADAS to full autonomy, the road ahead is exciting and full of potential.

The Evolution from ADAS to Full Autonomy

The journey from ADAS to full autonomy is gradual and systematic. ADAS features include lane-keeping assist and adaptive cruise control. These systems are the building blocks of modern autonomous vehicles.

The Society of Automotive Engineers (SAE) defines six levels of driving automation, numbered 0 to 5, in its J3016 standard. Level 0 has no driving automation, Level 1 assists with either steering or speed control, and Level 5 achieves full autonomy. Each level marks progress in autonomy capabilities.

Autonomous systems at higher levels need more complex hardware. This involves integrating multiple sensors and powerful computing platforms. These innovations ensure the vehicle can safely navigate without human input.

Development in hardware reflects advancements in computation and miniaturization. These improvements make autonomous vehicles more reliable and efficient. They enable the processing of data in real-time, crucial for safe driving.

Key phases in the evolution include:

- Level 0 to Level 1-2: Basic automated functions like braking and steering assist.

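- Level 3-4: Conditional and high automation. At Level 3 the driver can disengage but must take over when the system requests it; at Level 4 the vehicle needs no driver intervention within its defined operating conditions.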

- Level 5: Full autonomy, where the vehicle operates independently at all times.

These advancements showcase the roadmap of autonomous technology development.
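For readers who think in code, the levels and phases above can be captured in a small lookup. This is only an illustrative sketch: the level names follow SAE terminology, while the helper functions `driver_must_supervise` and `phase` and the phase labels are assumptions made for this example.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, 0 through 5."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """At Levels 0-2 the human driver monitors the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def phase(level: SAELevel) -> str:
    """Map a level to one of the three phases (labels are illustrative)."""
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "driver support"
    if level <= SAELevel.HIGH_AUTOMATION:
        return "conditional/high automation"
    return "full automation"


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, phase(level), driver_must_supervise(level))
```

Using an ordered enum keeps comparisons such as "Level 2 or below" readable in the rest of a codebase.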

Key Components of Autonomous Vehicle Hardware

Autonomous vehicles rely on a blend of hardware components. These components are essential for perceiving the environment and making driving decisions. Understanding these elements provides insight into autonomous vehicle capabilities.

Vehicle sensors form the backbone of perception systems. They capture data from the vehicle's surroundings. Such data is vital for the vehicle to understand its environment and operate safely.

Processing power is another crucial element. Modern vehicles use high-performance computing platforms to analyze sensor data. This processing helps in making real-time decisions and executing driving tasks efficiently.

Communication systems also play a vital role. They ensure the vehicle remains connected with infrastructure and other vehicles. This connectivity enhances safety and the vehicle’s ability to respond to dynamic conditions.

Autonomous vehicles require robust power systems. These systems support the energy demands of sensors and computing platforms. Ensuring consistent power supply is essential for reliable vehicle operation.

The key components of autonomous vehicle hardware include:

- Sensors: LiDAR, radar, cameras

- Computing: High-performance processors

- Communication: V2X systems

- Power Systems: Energy management solutions

Understanding these components shows the complexity and synergy needed for autonomous driving technologies.

Vehicle Sensors: LiDAR, Radar, and Cameras

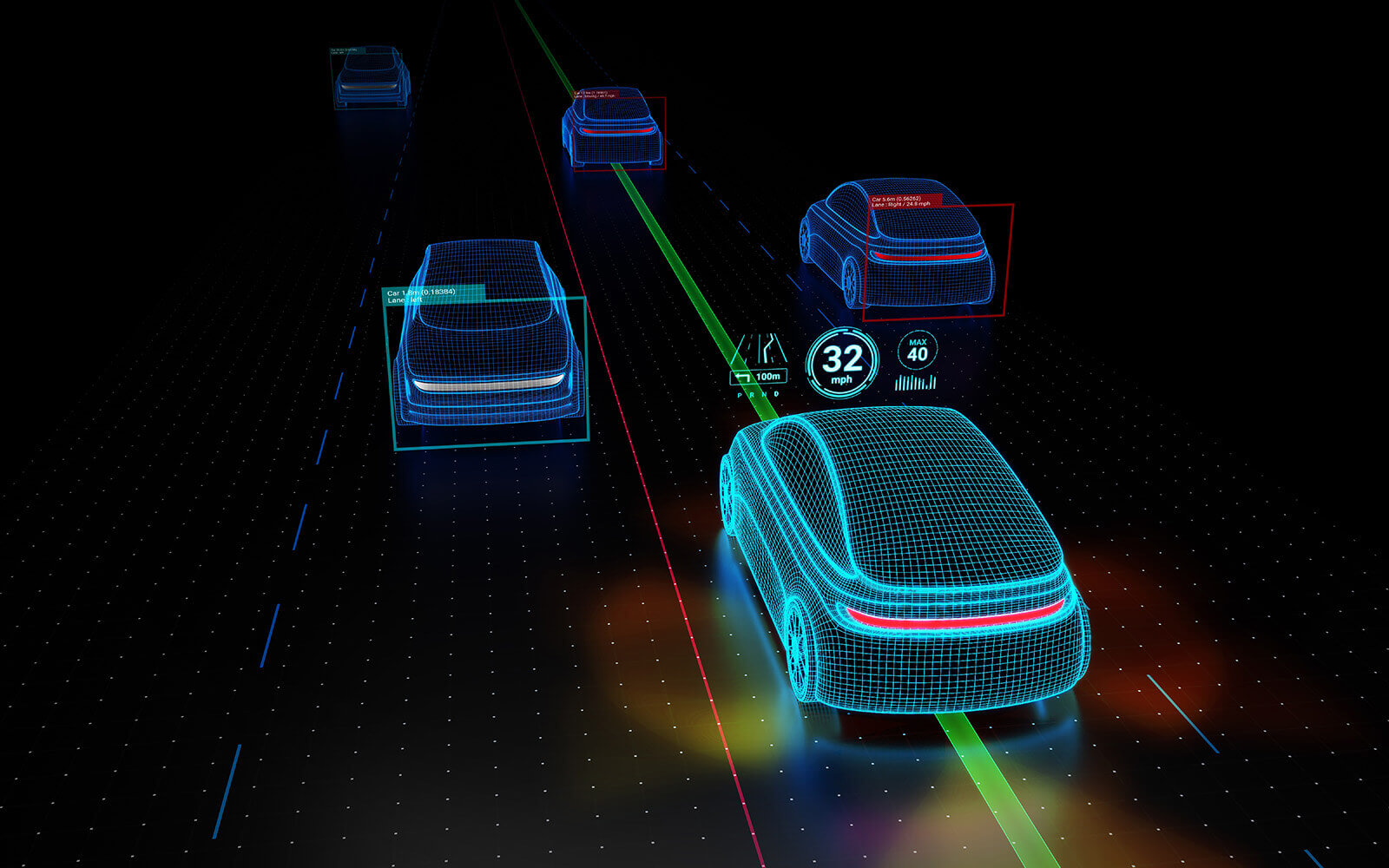

Sensors are at the heart of autonomous systems. They allow vehicles to perceive their environment in remarkable detail. Three main types of sensors are crucial: LiDAR, radar, and cameras.

LiDAR sensors emit laser pulses and time their reflections to measure distance. The resulting high-resolution 3D map lets the vehicle detect obstacles with precision, which makes LiDAR vital for navigation.
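The distance measurement behind LiDAR comes down to time of flight: the pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below shows just that arithmetic; the function name `tof_distance_m` is made up for this example, and a real sensor adds calibration and noise handling that are not shown here.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value; air differs by roughly 0.03%


def tof_distance_m(round_trip_s: float) -> float:
    """Convert a laser pulse's round-trip time into a one-way distance."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A 1 microsecond round trip corresponds to a target about 150 m away.
    print(f"{tof_distance_m(1e-6):.2f} m")
```

The numbers show why timing precision matters: one nanosecond of timing error shifts the measured range by about 15 cm.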

Radar systems are key for detecting objects and their speed. They keep working in poor weather such as rain and fog, where optical sensors struggle. Radar is particularly effective at measuring the distance to other vehicles and their relative speed.
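Radar reads speed from the Doppler effect: a target moving toward or away from the sensor shifts the frequency of the reflected wave. Here is a minimal sketch of that relationship, assuming a 77 GHz carrier (a common automotive radar band) and target speeds far below the speed of light. The function names are illustrative only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency for this example


def doppler_shift_hz(radial_speed_m_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Frequency shift of the echo from a target closing at the given radial speed."""
    # Factor 2: the wave is shifted once on the way out and once on the way back.
    return 2.0 * radial_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def radial_speed_m_s(doppler_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the relationship: recover radial speed from a measured shift."""
    return doppler_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    shift = doppler_shift_hz(30.0)  # 30 m/s is 108 km/h
    print(f"shift: {shift / 1e3:.1f} kHz, recovered speed: {radial_speed_m_s(shift):.1f} m/s")
```

Note that only the radial component of motion shows up this way; a vehicle crossing the beam at a right angle produces almost no Doppler shift.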

Cameras capture visual data, essential for recognizing road signs and lane markings. They offer a wide field of view and detail-rich information. Cameras complement LiDAR and radar by adding visual context to the driving environment.

Each sensor type contributes unique information. Together, they build a comprehensive understanding of the surroundings. Because their coverage overlaps, they also provide the redundancy that is crucial for safety and reliability.

Vehicle sensors ensure the safe navigation of autonomous vehicles by supplying precise and reliable data. They are indispensable in the hardware framework of autonomy.

Sensor Fusion and Data Processing

Sensor fusion combines data from various sensors. This integration creates a more comprehensive understanding of the environment. It’s pivotal for accuracy and reliability in autonomous systems.

The process involves merging inputs from LiDAR, radar, and cameras. Each sensor type excels in different conditions. Combining them mitigates the weaknesses of individual sensors.
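One simple way to picture this merging is inverse-variance weighting: each sensor's distance estimate is weighted by how much it can be trusted. The sketch below assumes independent, unbiased measurements of the same quantity, and the sample variances are invented for illustration. Production stacks typically use richer techniques such as Kalman filtering, so treat this as a toy model rather than how any particular vehicle does it.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class RangeEstimate:
    source: str         # e.g. "lidar", "radar", "camera"
    distance_m: float   # estimated distance to the same object
    variance_m2: float  # measurement uncertainty (smaller = more trusted)


def fuse(estimates: Sequence[RangeEstimate]) -> Tuple[float, float]:
    """Return the inverse-variance weighted distance and its fused variance."""
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance_m2 <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings: the variances are made up for this example.
    readings = [
        RangeEstimate("lidar", 50.0, 0.01),
        RangeEstimate("radar", 50.6, 0.09),
        RangeEstimate("camera", 48.0, 1.0),
    ]
    distance, variance = fuse(readings)
    print(f"fused distance: {distance:.2f} m, variance: {variance:.4f} m^2")
```

The fused variance is always smaller than the best individual variance, which is the numerical version of "combining them mitigates the weaknesses of individual sensors."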

Data processing in autonomous vehicles requires immense computing power. Real-time analysis is critical for safe navigation. High-performance platforms process this data rapidly, enabling timely decision-making.

Sensor fusion supports better object detection and environment understanding. This synergy is essential for handling complex driving scenarios. It facilitates smooth operation under diverse conditions.

Benefits of sensor fusion include:

- Improved Accuracy: Enhanced data precision from integrated sources.

- Reliability: Consistent performance across various environments.

- Safety: Better decision-making through comprehensive data analysis.

The efficacy of sensor fusion and data processing is central to the success of autonomous vehicle hardware systems. It exemplifies the integration of cutting-edge technology to achieve sophisticated autonomous driving functions.

High-Performance Computing and AI in Autonomous Systems

High-performance computing is integral to autonomous vehicles. It powers the complex algorithms that interpret sensor data. This computing enables real-time decision-making, crucial for safe driving.

Modern autonomous systems rely on AI to learn from vast datasets. Machine learning algorithms improve vehicle decisions with experience. They refine their actions based on new information over time.

AI models run on advanced hardware, handling numerous inputs at once. These inputs include sensor data, maps, and vehicle states. The processing speed of these systems is vital for timely responses.

The integration of AI in vehicles enhances their adaptability. AI can identify patterns and predict events, improving navigation and safety. This capability is vital for managing the unpredictable nature of real-world driving.

Key benefits of high-performance computing and AI include:

- Efficiency: Optimized routes and reduced fuel consumption.

- Safety: Better obstacle detection and avoidance.

- Adaptability: Enhanced performance in diverse conditions.

In sum, the synergy between high-performance computing and AI transforms autonomous vehicles, offering them intelligence for independent operation. This combination drives innovation, making autonomous systems increasingly reliable and efficient.

Safety, Redundancy, and Cybersecurity in Hardware Design

Safety is paramount in autonomous vehicle hardware design. Every component must operate reliably under various conditions. Malfunctions can lead to serious accidents, so safety measures are critical.

Redundancy is a key strategy for enhancing safety. By duplicating vital systems, autonomous vehicles can remain operational even if a component fails. This redundancy ensures no single point of failure can compromise the vehicle's function.
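A classic way to use duplicated sensors is voting: read the same quantity from three or more channels and take the median, so one faulty channel cannot drag the result away. The sketch below illustrates the idea. The function name `vote`, the tolerance, and the sample readings are assumptions for this example, not a description of any specific vehicle.

```python
from statistics import median
from typing import List, Sequence, Tuple


def vote(readings: Sequence[float], tolerance: float) -> Tuple[float, List[int]]:
    """Median-vote redundant channels and flag those that disagree.

    Returns the voted value and the indices of channels whose reading
    differs from it by more than `tolerance`.
    """
    if len(readings) < 3:
        raise ValueError("voting needs at least three redundant channels")
    voted = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - voted) > tolerance]
    return voted, suspects


if __name__ == "__main__":
    # Hypothetical wheel-speed readings in m/s; channel 2 has failed.
    value, suspects = vote([12.1, 12.0, 97.3], tolerance=0.5)
    print(f"voted value: {value}, suspect channels: {suspects}")
```

With three channels the median tolerates one arbitrary failure, and the suspect list gives diagnostics something to act on.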

Cybersecurity is equally crucial in protecting autonomous systems. With vehicles increasingly connected, they are vulnerable to cyber threats. Effective cybersecurity protocols prevent unauthorized access and safeguard sensitive data.

The hardware design for autonomous vehicles includes:

- Multiple backups for essential systems.

- Firewalls and encryption to protect communications.

- Continuous monitoring for potential vulnerabilities.
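To make "protecting communications" a little more concrete, here is a minimal sketch of message authentication using Python's standard `hmac` module. It covers only integrity and authenticity with a pre-shared key. It does not encrypt the payload, offers no replay protection, and is not a production vehicle security scheme. The `sign` and `verify` helpers and the sample command are hypothetical.

```python
import hashlib
import hmac
from typing import Optional

TAG_LEN = 32  # size of a SHA-256 digest in bytes


def sign(key: bytes, payload: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can detect tampering."""
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def verify(key: bytes, message: bytes) -> Optional[bytes]:
    """Return the payload if its tag is valid, otherwise None."""
    if len(message) < TAG_LEN:
        return None
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest runs in constant time to avoid leaking the tag via timing.
    return payload if hmac.compare_digest(tag, expected) else None


if __name__ == "__main__":
    key = b"demo-key-not-for-production-use!"  # assumed pre-shared key
    message = sign(key, b"BRAKE:0.4")
    print(verify(key, message))                # the original payload
    print(verify(key, b"X" + message[1:]))     # tampered message is rejected
```

A receiver that drops every message failing `verify` cannot be fed forged commands by someone who lacks the key.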

In summary, the design focuses on building robust systems. By prioritizing safety, redundancy, and cybersecurity, autonomous vehicles can be trusted to operate under diverse and challenging conditions. These measures are vital to gaining public confidence and enabling widespread adoption.

Connectivity: V2X and Edge Computing

Connectivity plays a crucial role in the effectiveness of autonomous vehicles. Vehicle-to-Everything (V2X) communication allows vehicles to interact with the environment. This interaction extends to other vehicles, infrastructure, and networks, enhancing overall safety and efficiency.

Edge computing processes data locally on the vehicle, reducing latency. This approach enables quicker decision-making, crucial in fast-moving traffic situations. The reduction in data transfer to centralized clouds also helps in maintaining privacy and security.
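A quick calculation shows why latency matters so much: the vehicle keeps moving while it waits for an answer. The sketch below converts a processing delay into distance travelled. The latency figures are hypothetical round numbers chosen for illustration, not measurements of any real system.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance the vehicle covers while waiting for a decision."""
    speed_m_s = speed_kmh / 3.6        # km/h to m/s
    return speed_m_s * (latency_ms / 1000.0)


if __name__ == "__main__":
    speed = 100.0  # km/h
    # Hypothetical latencies: a fast on-vehicle path vs. a slower remote round trip.
    for label, latency in (("on-vehicle", 10.0), ("remote", 100.0)):
        travelled = distance_during_latency_m(speed, latency)
        print(f"{label}: {latency:.0f} ms -> {travelled:.2f} m travelled")
```

At 100 km/h, a 100 ms delay means roughly 2.8 m of travel before the vehicle can react, compared with under 0.3 m for a 10 ms delay.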

The integration of V2X and edge computing offers several advantages:

- Real-time communication with traffic signals and other vehicles.

- Decentralized processing that leads to faster responses.

- Improved privacy through localized data handling.

As technology evolves, these connectivity innovations will become standard. They contribute significantly to the autonomy and intelligence of modern vehicles.

Testing, Validation, and Simulation of Autonomous Hardware

The development of autonomous vehicle hardware requires rigorous testing and validation. These processes ensure reliability and safety in real-world scenarios. Simulations play a pivotal role in testing various conditions without physical trials.

Simulations allow for the modeling of complex environments. This helps developers understand how hardware will react to different stimuli. Simulation platforms replicate weather conditions, traffic scenarios, and unexpected obstacles.
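Scenario coverage is easy to picture as a grid: every combination of weather, traffic, and obstacle type becomes one test case. The sketch below builds such a grid with the standard library. The category values and the `Scenario` type are invented for illustration, and no particular simulation platform is implied.

```python
from dataclasses import dataclass
from itertools import product
from typing import List


@dataclass(frozen=True)
class Scenario:
    weather: str
    traffic: str
    obstacle: str


# Hypothetical condition sets; a real test plan would be far larger.
WEATHER = ("clear", "rain", "fog", "snow")
TRAFFIC = ("light", "dense")
OBSTACLES = ("none", "pedestrian", "debris")


def build_scenarios() -> List[Scenario]:
    """Enumerate every weather/traffic/obstacle combination."""
    return [Scenario(w, t, o) for w, t, o in product(WEATHER, TRAFFIC, OBSTACLES)]


if __name__ == "__main__":
    scenarios = build_scenarios()
    print(f"{len(scenarios)} scenarios, first: {scenarios[0]}")
```

Even this tiny grid yields 24 cases, which hints at why exhaustive physical testing quickly becomes impractical and simulation takes over.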

The benefits of simulation and validation include:

- Reduced costs by minimizing physical testing.

- Enhanced safety through virtual risk assessments.

- Comprehensive scenario coverage that tests hardware limits.

Through detailed simulation, developers can predict and enhance hardware performance. This capability is vital for achieving full autonomy levels efficiently. As simulations improve, so does the confidence in autonomous systems.

Challenges and Future Trends in Autonomous Vehicle Hardware

Autonomous vehicle hardware faces multiple challenges as the push towards full autonomy continues. One major hurdle is ensuring the safety and reliability of systems in diverse environments. This involves designing hardware that can handle extreme conditions and unexpected obstacles without failure.

Additionally, the cost of integrating sophisticated sensors and computing platforms remains high. Making these technologies affordable while maintaining quality is a significant barrier. Collaboration and innovation are key to overcoming these cost hurdles.

Looking to the future, several trends are becoming apparent. These include:

- Improved sensor technology with greater precision and range.

- Enhanced AI capabilities that make real-time decisions faster.

- Stronger partnerships between tech companies and automakers.

As these trends develop, the capabilities of autonomous vehicles will expand significantly. Staying ahead in this industry requires continual adaptation and research into emerging technologies. The journey towards full autonomy is an evolving narrative driven by ingenuity and determination.

The Road Ahead for Autonomous Vehicle Hardware

As autonomous vehicle technology progresses, hardware will play a pivotal role in achieving full autonomy. Continuous innovations in sensor integration, AI, and connectivity are essential for vehicles to operate safely and efficiently.

The future of autonomous systems hinges on overcoming present-day challenges. Balancing cost with advanced technology and ensuring robust cybersecurity measures are paramount. These aspects will drive the industry's development forward.

Looking ahead, the potential impact of autonomous vehicles is enormous. From safer roads to reduced congestion and increased accessibility, the benefits extend far beyond the vehicles themselves. As engineers and researchers push the boundaries of what's possible, the road ahead promises transformative changes in how we view transportation.

Click Boards™ for Autonomous Vehicle Prototyping: Sense & Drive

Designing autonomous systems starts with accurate sensing and precise control. That’s where our curated set of automotive-focused Click boards™ comes in.

We’ve handpicked twelve Click boards™ ideal for prototyping embedded hardware in ADAS and autonomous vehicles - from inertial and GNSS sensing to motor drivers. These compact, plug-and-play modules accelerate development of key features like localization, motion tracking, and drive control, all within an embedded system environment.

Whether you're building robotic drive systems, driver-assist prototypes, or full vehicle autonomy stacks, these boards help you test quickly and iterate safely - bringing real-world sensing and actuation into your design.

REAL-WORLD AUTONOMOUS PROTOTYPING: CLICK BOARDS™ IN ACTION

Curious how these boards fit into real automotive projects? Explore EmbeddedWiki, our project hub, to see how 6DOF IMU 26 Click, GNSS RTK 5 Click, and Brushless or DC Motor Click boards™ are applied in localization, motion control, and intelligent vehicle behaviors.

These hands-on guides walk you through practical applications - ideal for developers and engineers designing the next generation of autonomous vehicle hardware. Other sensing and control Click boards™ are also featured, giving you a complete platform for rapid, modular development.

It’s all just a click away.

ABOUT MIKROE

MIKROE is committed to changing the embedded electronics industry through the use of time-saving industry-standard hardware and software solutions. With unique concepts like Remote Access, One New Product/Day, Multi-Architectural IDE and, most recently, the EmbeddedWiki™ platform with more than a million ready-for-use projects, MIKROE combines its dev boards, compilers, smart displays, programmers/debuggers and 1850+ Click peripheral boards to dramatically cut development time. mikroBUS™, mikroSDK™, SiBRAIN™ and DISCON™ are open standards, and mikroBUS™ alone has been adopted by over 100 leading microcontroller companies and integrated on their development boards.

Your MIKROE