Artificial intelligence (AI) is revolutionizing the way we interact with technology. It's not just about smart speakers or self-driving cars anymore.

AI is moving closer to the edge.

But what does "artificial intelligence at the edge" mean? It refers to the shift of AI processing from the cloud to local devices, or "edge devices".

These devices range from smartphones to Internet of Things (IoT) gadgets, and even industrial equipment. They're becoming smarter, more autonomous, and more capable of real-time processing.

This shift to the edge brings numerous benefits. It reduces latency, saves bandwidth, and enhances privacy. But it also presents new challenges, especially from a hardware perspective. Edge devices must be powerful enough to handle AI workloads. Yet, they must also be energy-efficient and compact. This balance is not easy to achieve.

In this article, we'll delve into the hardware aspect of AI on edge devices. We'll explore the technologies that make edge AI possible, the challenges faced, and the future trends in this exciting field.

Join us as we unravel the intricacies of machine learning on edge devices from a hardware perspective.

Introduction to Edge AI

Defining AI Edge Computing

Edge AI represents a shift away from cloud-centric computing. Instead of relying on remote servers, AI edge computing processes data locally on devices. This approach greatly reduces the need for continuous cloud connectivity.

AI edge computing allows devices to act on data quickly, because processing happens right where the data is created. This lets devices make decisions independently, without waiting for a round trip to a remote server.

Understanding the components of edge AI is crucial. Key elements include:

- Edge devices: These range from smartphones to industrial sensors.

- Local processing: AI tasks are executed on-device, reducing the reliance on cloud services.

- Real-time operations: The system processes and responds to data with minimal delay.

Traditional AI models need significant computational power, typically from cloud data centers. However, AI edge computing benefits from processing closer to the data source. This ability to compute locally not only boosts efficiency but also enhances data security.

Edge AI leverages specialized hardware to perform complex computations on-site. It balances the need for speed with energy efficiency. This balance is vital as AI becomes increasingly integral in various fields.

Importance of AI on Edge Devices

The move towards AI on edge devices addresses several pressing technological needs. The most immediate benefit is reduced latency. Fast responses are critical in applications like autonomous vehicles and healthcare monitoring.

Bandwidth savings are another advantage. By processing data locally, devices don't need to constantly send information to the cloud. This shift reduces data traffic significantly.

Key reasons AI on edge devices matters include:

- Enhanced performance: Edge computing reduces delays by keeping processing local.

- Improved privacy: Data processed locally minimizes exposure to potential breaches.

- Cost efficiency: Lower data transfer saves both bandwidth and associated costs.

Privacy deserves particular emphasis. With data staying closer to its origin, there's less risk of interception during transmission. This is crucial for sensitive applications such as personal health data.

Moreover, AI on edge devices allows for more personalized experiences. Devices learn and adapt to users' preferences faster, providing tailored solutions almost in real time. This ability to customize and adapt is revolutionizing user interactions across industries.

Hardware Requirements for AI at the Edge

Processing Units

At the core of AI on edge devices are the processing units. These units execute AI algorithms, and their efficiency is critical to ensuring that devices can handle complex computations quickly.

Edge devices use various processing units tailored for specific needs. The choice of processor affects both performance and power consumption. Understanding these processors is essential for optimizing edge computing.

There are several types of processors used in edge AI:

- Central Processing Units (CPUs): Known for their versatility.

- Graphics Processing Units (GPUs): Effective for parallel processing tasks.

- Field-Programmable Gate Arrays (FPGAs): Customizable for specific functions.

- Application-Specific Integrated Circuits (ASICs): Designed for specialized tasks.

The right processor depends on the application requirements. CPUs offer broad compatibility, making them suitable for general tasks. GPUs, however, excel at tasks that involve large datasets and complex calculations. Their architecture is designed for massively parallel operations, which suits the matrix arithmetic at the core of machine learning models.

FPGAs and ASICs are valuable when specialized processing is needed. Both can be tailored to specific AI tasks, providing high efficiency and performance. FPGAs can be reprogrammed after deployment, which suits evolving models, while ASICs are fixed at manufacture but typically deliver the best performance per watt for the task they were designed for.

CPU vs. GPU

Choosing between CPUs and GPUs significantly affects how AI tasks are performed on edge devices. Each has its strengths and optimal use cases in edge computing.

CPUs are the traditional workhorses of computing. They handle sequential processing tasks effectively, making them suitable for a wide range of applications. Their general-purpose nature allows flexibility in running diverse workloads.

On the other hand, GPUs are designed for parallel processing. This capability is advantageous for running machine learning algorithms. Training models and carrying out large-scale computations are tasks where GPUs shine.

The decision between CPU and GPU often involves several factors:

- Task complexity: GPUs handle complex, parallel tasks better.

- Energy efficiency: CPUs are often more energy-efficient for less demanding tasks.

- Cost considerations: GPUs tend to be more expensive but provide greater performance for intensive tasks.

In edge AI, the trend is moving towards using both CPUs and GPUs. They complement each other, balancing performance and energy consumption. The integrated use of CPUs and GPUs can lead to more powerful and efficient edge AI systems.

Specialized AI Accelerators

Specialized AI accelerators have become crucial in edge computing. These accelerators are designed to handle AI workloads with much higher efficiency and speed than general-purpose processors. They provide the processing power needed for sophisticated AI tasks on edge devices.

AI accelerators come in different forms, each suited to specific tasks. Their design focuses on optimizing AI computations while keeping power consumption in check. This balance is vital for extending the battery life of edge devices.

Key types of specialized AI accelerators include:

- Digital Signal Processors (DSPs): Used for real-time processing of signals.

- Neuromorphic Processors: Mimic the structure of biological neural networks.

- Tensor Processing Units (TPUs): Accelerate the tensor (multidimensional array) operations at the heart of neural networks.

DSPs are ideal for processing audio and image data, making them useful in applications requiring real-time data analysis. Neuromorphic processors present a novel approach, borrowing ideas from the architecture of the brain, such as event-driven spiking neurons, to process information with very little energy.
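To make "real-time processing of signals" concrete, here is a minimal Python sketch of a streaming moving-average filter, one of the simplest finite impulse response (FIR) filters a DSP might apply sample by sample to audio or sensor data. It is illustrative only: production DSP code is usually written in C or assembly with fixed-point arithmetic, and the class name and `taps` parameter are hypothetical.

```python
from collections import deque


class MovingAverageFilter:
    """Streaming FIR low-pass filter: each output is the mean of the last `taps` samples."""

    def __init__(self, taps: int) -> None:
        if taps < 1:
            raise ValueError("taps must be >= 1")
        self.taps = taps
        self.window = deque(maxlen=taps)  # most recent samples
        self.total = 0.0                  # running sum of the samples in the window

    def process(self, sample: float) -> float:
        # If the window is full, the oldest sample is about to be evicted by append().
        if len(self.window) == self.taps:
            self.total -= self.window[0]
        self.window.append(sample)
        self.total += sample
        return self.total / len(self.window)


f = MovingAverageFilter(taps=3)
print([f.process(x) for x in [3.0, 6.0, 9.0, 12.0]])  # [3.0, 4.5, 6.0, 9.0]
```

Keeping a running sum means each new sample costs a constant amount of work regardless of the window length, which is the kind of predictable per-sample cost that real-time processing depends on.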

TPUs, developed by Google specifically for machine learning, can outperform traditional CPUs and GPUs on the operations they target. They have changed how neural networks are deployed, increasing processing speed and efficiency.

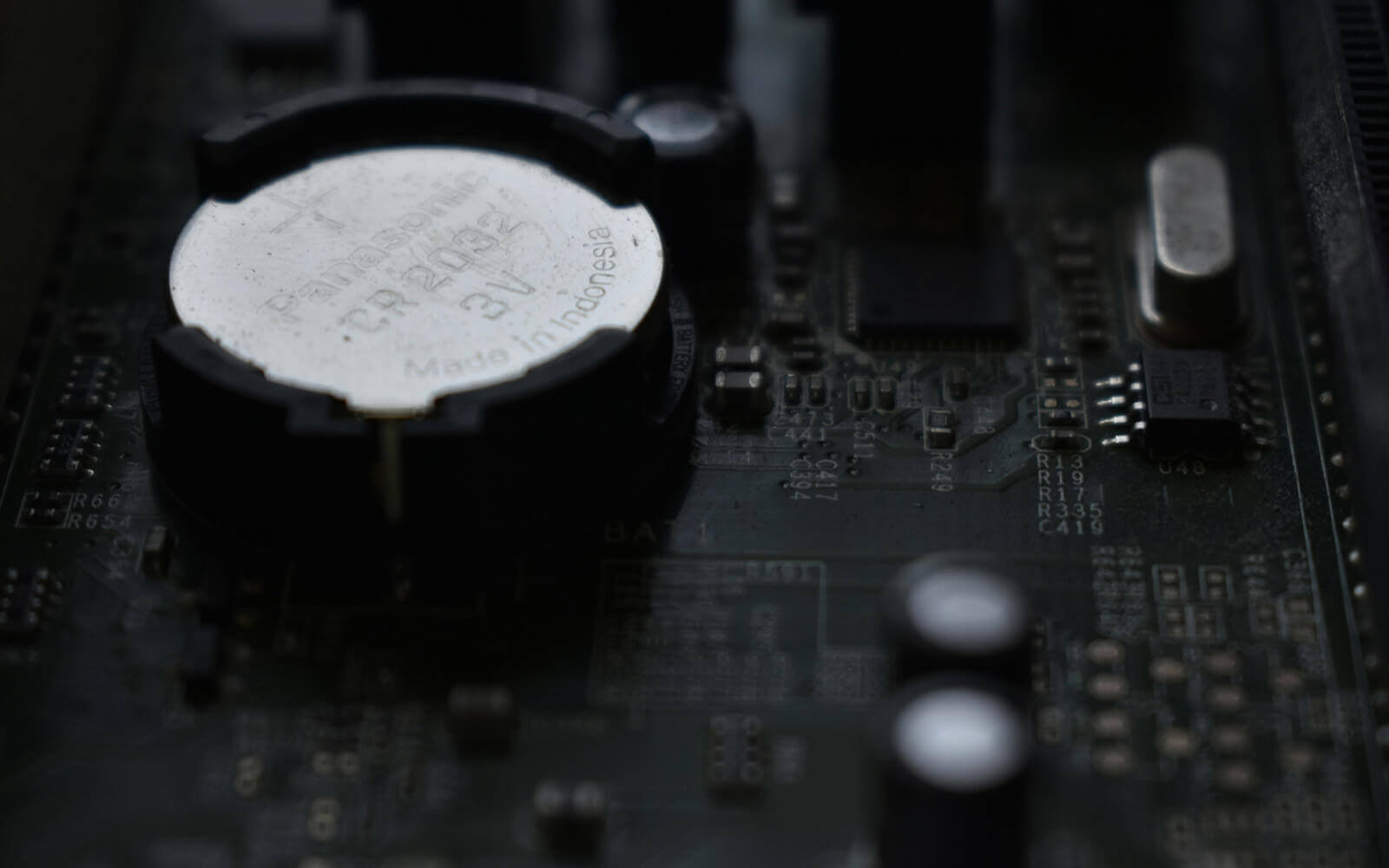

Memory and Storage Considerations

Memory and storage are pivotal in running AI applications on edge devices. They affect data transfer speeds and the overall system responsiveness. Adequate memory ensures that AI models can run efficiently without bottlenecks.

Edge devices face limitations in available memory and storage. These limitations require careful consideration of how data is stored and accessed. Managing memory efficiently is crucial to ensure AI tasks run smoothly and effectively.

Dynamic Random-Access Memory (DRAM) is commonly used in edge devices. It provides the necessary speed for quick data access. However, balancing DRAM usage with energy consumption is essential to maintain device efficiency.

Non-volatile storage such as flash memory keeps model weights and collected data when the device is powered off and offers fast reads, although writes are slower and gradually wear out the memory cells. This matters for devices that continuously collect and analyze data. Effective storage management can considerably improve the performance of AI on edge devices.
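A quick way to see why memory is so tight is to estimate how much space a model's weights alone occupy: parameter count multiplied by bytes per parameter. The sketch below assumes a hypothetical model with 5 million parameters and an 8 MB memory budget; it ignores activations, runtime buffers, and the rest of the firmware, so real requirements will be higher.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, dtype: str) -> float:
    """Storage for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def fits_in_budget(num_params: int, dtype: str, budget_mb: float) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_footprint_mb(num_params, dtype) <= budget_mb


for dtype in ("float32", "int8"):
    mb = weight_footprint_mb(5_000_000, dtype)
    print(dtype, mb, fits_in_budget(5_000_000, dtype, budget_mb=8.0))
# float32 20.0 False
# int8 5.0 True
```

Storing the same weights as 8-bit integers instead of 32-bit floats cuts the footprint by a factor of four, which is one reason reduced-precision formats are attractive on edge hardware.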

Sensor Integration and Compatibility

Sensors play a vital role in edge computing, serving as the bridge between the physical world and digital systems. They collect data that AI systems analyze to make informed decisions. The effectiveness of an edge AI system greatly depends on sensor quality and compatibility.

Integrating sensors with edge devices requires careful planning. Compatibility ensures sensors work harmoniously with the processing units, maximizing data flow. This synergy is necessary to provide accurate, timely information.

Types of sensors commonly used include:

- Environmental sensors: Measure temperature, humidity, and other conditions.

- Motion sensors: Detect movement and orientation.

- Optical sensors: Capture visual data from the surroundings.

Sensor integration also involves ensuring low latency in data transmission. Delays in data processing can affect the performance of AI applications. Real-time data access is crucial for applications like autonomous vehicles and industrial monitoring.

Addressing compatibility involves using standard interfaces and protocols, such as I2C, SPI, and UART, so that different sensors can easily communicate with the processing units. Proper sensor integration enhances the capability of AI systems on edge devices to deliver precise, real-time insights.

Performance Optimization Techniques

Efficient Algorithms for Edge Computing

Efficiency is paramount in edge computing. The algorithms used must handle tasks swiftly, ensuring quick data processing and decision-making. Designing algorithms with edge devices in mind is crucial for optimal performance.

Edge computing environments demand algorithms that consume minimal power and memory. They should capitalize on the available hardware features to maximize processing efficiency. This optimization leads to significant performance improvements.

Efficient algorithms generally focus on:

- Real-time processing capabilities: To ensure minimal latency.

- Low-power consumption: To extend device battery life.

- Scalability: To handle varying data volumes effectively.

To achieve these goals, developers often turn to lightweight models. Such models reduce computational complexity, ensuring rapid processing. Selecting the right algorithm lets developers balance accuracy, power usage, and speed.

The use of efficient algorithms enhances the reliability of AI edge computing. It allows devices to operate smoothly in diverse conditions. Moreover, the right algorithms help alleviate hardware constraints, providing more robust and responsive systems.

Model Compression and Pruning

Model compression reduces the size of AI models, making them easier to deploy on edge devices. This technique removes or simplifies unnecessary components with little or no loss of accuracy. The goal is to maintain performance while freeing up valuable resources.

Pruning simplifies neural networks by removing redundant weights, neurons, or channels. It trims networks without significantly impacting their predictive capabilities. This approach is effective for maintaining model efficacy on limited hardware.
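One common variant, unstructured magnitude pruning, zeroes out the individual weights with the smallest absolute values, on the assumption that they contribute least to the output. The Python sketch below applies it to a flat list of weights; the function name and `sparsity` parameter are illustrative. In practice, pruning is usually followed by fine-tuning to recover accuracy, and the real savings depend on whether the runtime or hardware can exploit the resulting zeros.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


print(magnitude_prune([0.5, -0.05, 0.3, 0.01, -0.8, 0.02], sparsity=0.5))
# [0.5, 0.0, 0.3, 0.0, -0.8, 0.0]
```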

Key strategies in compression and pruning include:

- Quantization: Reduces the numerical precision of weights, biases, and often activations, for example from 32-bit floating point to 8-bit integers.

- Weight pruning: Eliminates inconsequential parameters.

- Distillation: Creates a smaller model by training it to mimic a larger one.

Each strategy has its unique application scenarios. Quantization is particularly useful for reducing computation and storage requirements. Pruning targets inefficiencies, ensuring streamlined models that are quick to execute.
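As an illustration of quantization, the sketch below maps a list of 32-bit floats to 8-bit integers using an affine scheme: each value is stored as `round(x / scale) + zero_point` and recovered as `(q - zero_point) * scale`. It is a simplified per-tensor sketch that assumes the value range is known up front; real toolchains calibrate ranges on sample data and often quantize per channel. The function names are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Affine quantization of floats to the int8 range [-128, 127]."""
    lo = min(min(values), 0.0)  # include 0 in the range so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-128 - lo / scale))  # integer that represents 0.0
    q = [max(-128, min(127, int(round(x / scale)) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [(v - zero_point) * scale for v in q]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
```

Each weight now needs one byte instead of four, and for values inside the calibrated range the reconstruction error is at most half of `scale`.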

Implementing these methods results in smaller, faster models. They become more feasible for real-time applications, enabling better performance on edge devices. Compression and pruning thus play a significant role in advancing AI on the edge.

Energy Consumption Management

Managing energy is a critical aspect of AI edge computing. Devices must operate efficiently to sustain battery life and reduce environmental impact. Minimizing power consumption without compromising performance is a central challenge.

Several approaches help manage energy consumption effectively. Techniques that enhance energy efficiency can prolong device operation and improve user experience. The development of energy-conscious strategies is essential to sustain edge AI functionality.

Effective energy management practices focus on:

- Dynamic voltage and frequency scaling (DVFS): Adjusts supply voltage and clock frequency to match workload demands.

- Task scheduling: Optimizes the order and timing of processes.

- Low-power design techniques: Minimize unnecessary power draw.

These strategies ensure that energy resources are used wisely. Dynamic voltage scaling allows devices to adapt power usage to their current needs, conserving energy. Task scheduling maximizes resource utilization, avoiding redundant energy expenditure.
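The reasoning behind dynamic voltage scaling can be sketched with the standard first-order model of CMOS switching power, P ≈ C × V² × f. A task that needs a fixed number of clock cycles runs for cycles / f seconds, so its dynamic energy is roughly C × V² × cycles: running slower saves energy only because it allows a lower voltage. The Python sketch below picks the lowest-energy operating point that still meets a deadline. The operating-point table, the effective capacitance value, and all names are hypothetical, and the model ignores static (leakage) power, which in practice can make finishing quickly and then sleeping the better choice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OperatingPoint:
    freq_hz: float    # clock frequency
    voltage_v: float  # supply voltage required at that frequency


def dynamic_energy_j(cycles: int, point: OperatingPoint, c_eff_f: float) -> float:
    # P = C * V^2 * f and t = cycles / f, so E = P * t = C * V^2 * cycles.
    return c_eff_f * point.voltage_v ** 2 * cycles


def pick_operating_point(cycles: int, deadline_s: float,
                         points: list[OperatingPoint],
                         c_eff_f: float) -> Optional[OperatingPoint]:
    """Return the lowest-energy point that finishes within the deadline, or None."""
    feasible = [p for p in points if cycles / p.freq_hz <= deadline_s]
    if not feasible:
        return None
    return min(feasible, key=lambda p: dynamic_energy_j(cycles, p, c_eff_f))


# Hypothetical operating points for an edge processor.
POINTS = [OperatingPoint(200e6, 0.9), OperatingPoint(400e6, 1.0), OperatingPoint(800e6, 1.2)]
print(pick_operating_point(20_000_000, 0.12, POINTS, 1e-9))  # the 200 MHz point
print(pick_operating_point(20_000_000, 0.06, POINTS, 1e-9))  # the 400 MHz point
```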

Designing low-power architectures further supports energy efficiency. Such architectures ensure that devices remain operative longer, even under demanding tasks. This careful balance of energy management is crucial for future-proofing AI edge computing systems.

Use Cases for AI on Edge Devices

Smart Home Applications

Smart home applications are a prominent example of AI on edge devices. By leveraging AI edge computing, these systems offer enhanced convenience and security. Devices like smart thermostats, cameras, and lighting systems operate autonomously, reacting to environmental changes in real-time.

AI in smart homes reduces dependency on cloud services, enabling faster responses and greater privacy. For example, voice assistants can process commands locally, without the need to send data externally. This ensures a more secure and seamless user experience.

Furthermore, AI-driven smart home applications can learn from user behaviors. They adjust settings for optimal comfort and energy savings. Over time, these devices fine-tune their operations to match user preferences, promoting user satisfaction and efficiency.

Incorporating AI on edge devices revolutionizes home management. These applications not only enhance convenience but also elevate efficiency and sustainability. By enabling intelligent automation, smart home technologies represent a significant advancement in everyday living.

Industrial IoT and Automation

In industrial settings, AI edge computing plays a pivotal role. It facilitates the integration of IoT devices, enhancing automation and operational efficiency. These systems enable real-time data processing, crucial for decision-making and process optimization.

For example, AI on edge devices helps in predictive maintenance. Equipment outfitted with sensors can identify potential issues before failures occur. This proactive approach minimizes downtime and extends equipment life, reducing overall maintenance costs.
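A very simple stand-in for the anomaly detection behind predictive maintenance is a rolling z-score: flag any sensor reading that sits far outside the recent distribution. The sketch below is a baseline under stated assumptions (a single scalar signal such as vibration amplitude, and a hypothetical threshold of 3 standard deviations), not the method any particular product uses; production systems typically use learned models over many features.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flags readings more than `threshold` standard deviations from the
    mean of the previous `window` readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        anomalous = False
        n = len(self.history)
        if n >= 2:  # a sample standard deviation needs at least two readings
            mean = sum(self.history) / n
            std = math.sqrt(sum((x - mean) ** 2 for x in self.history) / (n - 1))
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        # Anomalies are also added to the history; a real system might exclude them.
        self.history.append(value)
        return anomalous


detector = RollingZScoreDetector(window=20)
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95] * 3 + [5.0]
flags = [detector.update(r) for r in readings]
print(flags[-1])  # True: the 5.0 spike stands out from the baseline
```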

Edge AI is also instrumental in quality control and monitoring. Automated systems can detect defects during the production process. This ensures consistent product quality and reduces waste, contributing to sustainable manufacturing practices.

Moreover, industrial IoT with edge AI boosts productivity. It allows for autonomous machine operation and intelligent resource management. As a result, businesses can achieve higher efficiency and cost-effectiveness, driving competitive advantage in a rapidly evolving market.

Healthcare Monitoring Systems

The healthcare sector benefits greatly from AI at the edge. AI edge computing enables the development of advanced monitoring systems, improving patient care. These systems provide real-time health data analysis, supporting timely medical interventions.

Wearable devices equipped with AI can continuously track vital signs. They analyze parameters like heart rate, oxygen levels, and activity patterns. This continuous monitoring is pivotal for managing chronic conditions and alerting healthcare providers to emergencies.

AI on edge devices offers patients more autonomy and convenience. Through smart monitoring, patients can receive personalized health insights. This fosters better understanding and management of their health, enhancing overall well-being.

The integration of AI in healthcare brings precision and speed to diagnostics. By processing data locally, latency is reduced and privacy is maintained. Healthcare providers can rely on these insights for more accurate and informed decision-making, ultimately improving patient outcomes.

Challenges in Implementing AI at the Edge

Hardware Limitations

Implementing artificial intelligence at the edge presents several hardware challenges. One primary limitation is the computational power available on edge devices. Unlike cloud-based systems with virtually unlimited resources, edge devices face severe constraints.

These devices need to perform complex computations locally, despite limited hardware capabilities. This demands innovations in processor design to optimize performance without increasing power usage. Developers must strike a balance between computational strength and energy consumption.

Furthermore, edge devices often operate in diverse and demanding environments. They require hardware that is both powerful and resilient. Many edge AI applications must function reliably in conditions with temperature fluctuations, dust, or vibrations.

Addressing these hardware limitations is crucial for the success of AI edge computing. As technology evolves, creating solutions that can handle more demanding tasks efficiently will be key. Innovators continue to explore ways to enhance hardware robustness while maintaining affordability and compactness.

Connectivity Issues

Connectivity remains another significant barrier for AI on edge devices. Many edge applications rely on continuous data exchange with other devices or cloud services. However, connectivity can be unreliable, especially in remote or infrastructure-limited areas.

Poor connectivity can disrupt the real-time operations essential for many edge AI applications. Applications such as autonomous vehicles and industrial automation demand low-latency, reliable data exchange. Network latency or downtime can lead to significant performance issues or safety risks.

To mitigate connectivity challenges, developers employ various strategies. Some edge devices are designed with offline capabilities, allowing them to operate independently of the cloud. This reduces dependency on network availability and lowers bandwidth usage.
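One common offline pattern is store-and-forward: the device keeps producing results locally, queues the messages it cannot upload, and sends them in order once the link returns. The sketch below assumes a hypothetical `send` callback that returns `True` on successful delivery; the bounded queue drops the oldest messages when full, so memory use stays fixed even during a long outage.

```python
from collections import deque
from typing import Callable


class StoreAndForwardQueue:
    """Buffers outgoing messages while offline and flushes them oldest-first."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self.send = send                       # returns True if the upload succeeded
        self.buffer = deque(maxlen=capacity)   # oldest entries are dropped when full

    def publish(self, message: dict) -> None:
        self.buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to deliver buffered messages; return how many were sent."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # still offline: keep the message and retry on the next flush
            self.buffer.popleft()
            sent += 1
        return sent
```

Because a failed send leaves the message at the head of the queue, delivery order is preserved across outages; the trade-off is that very old data is discarded once the buffer fills.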

Nonetheless, achieving reliable connectivity is still a critical challenge for scaling edge applications. Future advancements in network infrastructure, such as the proliferation of 5G technology, promise to alleviate some connectivity barriers. These improvements could significantly bolster the adoption of AI at the edge.

Security Concerns

Security is a paramount concern for AI edge computing. Edge devices are often more vulnerable to attacks than centralized cloud systems. This is partly due to their wide distribution and more limited computational resources for security functions.

Sensitive data processed at the edge presents privacy risks. Unlike cloud-based systems that store data in secure data centers, edge devices process data locally. This decentralization makes it harder to implement comprehensive security measures across all devices.

Edge devices are usually far easier to access physically than cloud infrastructure. This increases the risk of tampering or unauthorized access. Developers must incorporate robust security protocols to protect against such threats.

To enhance security, manufacturers integrate advanced encryption and authentication mechanisms. Some edge AI solutions leverage hardware-based security features, bolstering protection against cyber threats. As the edge computing ecosystem grows, continuous innovation in security technologies and practices is imperative to safeguard sensitive information and maintain user trust.
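As a small example of message authentication, the sketch below attaches an HMAC-SHA256 tag using Python's standard library, so that a gateway can detect whether a sensor reading was altered in transit or sent by a device without the shared key. The key and field names are hypothetical. HMAC provides integrity and authenticity, not confidentiality (encryption would be a separate step); it does not stop replayed messages unless a counter or timestamp is included in the payload; and on real devices the key would ideally live in a hardware secure element rather than in application code.

```python
import hashlib
import hmac
import json


def sign_message(key: bytes, payload: dict) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


key = b"example-device-key"  # hypothetical shared secret
msg = sign_message(key, {"sensor": "temp", "value": 21.5})
print(verify_message(key, msg))  # True
```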

Future Trends in Edge Device Hardware

Advances in Chip Technology

The evolution of chip technology is shaping the future of AI at the edge. As demand for AI on edge devices grows, chip manufacturers are advancing their offerings. The latest chips are designed for speed, power efficiency, and reliability.

Specialized AI chips, such as ASICs, are becoming more prevalent. These chips provide tailored solutions to meet the specific needs of edge AI applications. With reduced power consumption and increased processing speeds, they optimize performance on edge devices.

Neuromorphic chips are also emerging as a promising development. Inspired by the brain's architecture, these chips excel at parallel processing and reducing energy usage. They hold immense potential for tasks that require real-time data processing at the edge.

Continual advancements in chip technology promise to drive higher efficiency and capabilities in edge devices. As the technology matures, we can expect more intelligent applications where AI thrives even in resource-constrained environments. This will further solidify the role of edge AI in various industries.

Increased Adoption of AI at the Edge

The adoption of AI on edge devices is surging. Companies are increasingly recognizing the benefits of processing data closer to where it’s generated. This approach reduces latency and allows for real-time decision-making.

Industries like healthcare and manufacturing are leading the charge in edge AI applications. Healthcare providers use edge AI for patient monitoring, ensuring timely interventions. Meanwhile, manufacturers leverage AI at the edge for automation and predictive maintenance.

The increasing reach of 5G networks is expected to accelerate this trend. The enhanced connectivity and bandwidth of 5G support more robust and widespread edge AI implementations. This network advance allows devices to handle complex AI tasks efficiently and effectively.

As businesses across sectors realize AI edge computing's potential, its adoption is poised to expand. We are likely to see more innovative solutions that harness edge AI for improved efficiency, accuracy, and customer engagement.

Predictions for Edge Computing Development

Predictions for the development of edge computing point towards transformative changes. The next few years will likely see an increase in the integration of AI with edge computing. This synergy will result in more sophisticated and autonomous systems.

One key area of growth will be in the Internet of Things (IoT). Edge AI will empower IoT devices to process information on-site, reducing the need for cloud dependency. This shift will enhance decision-making processes and improve operational efficiencies.

Additionally, the convergence of edge AI with other technologies is anticipated. For example, combining AI with blockchain could offer secure data transactions on edge devices. Similarly, integrating AI with augmented reality could provide richer, more interactive experiences.

Ultimately, the trajectory for edge computing is towards smarter, more efficient, and more secure ecosystems. Innovations will drive developments, making edge computing an integral part of technology infrastructures. Businesses will increasingly rely on edge AI to maintain a competitive edge in an increasingly digital world.

Sensor Click Boards™ for Edge AI: Capture, Analyze & Act

Wondering how real-world data powers edge AI? Start with the right Sensor Click boards™.

We’ve curated a range of Sensor Click boards™ ideal for edge AI applications — from motion and environmental sensors to bio-signals and optics. These modular boards make it easy to prototype intelligent systems that detect, process, and respond to data in real time.

Whether you're building a smart monitoring solution, wearable device, or autonomous system, Sensor Click boards™ give your edge device the ability to sense and adapt — directly where the data is generated.

REAL-WORLD PROTOTYPING: SENSOR CLICK BOARDS™ IN ACTION

Want to see Sensor Click boards™ in real-world projects? Visit our project hub on EmbeddedWiki and explore how developers are using sensors to power edge AI — from air quality monitors and activity trackers to predictive maintenance systems.

Whether you’re building your first prototype or enhancing an existing design, these hands-on guides show how to pair Sensor Click boards™ with AI processing — making it easy to go from concept to working edge device, all just a click away.

ABOUT MIKROE

MIKROE is committed to changing the embedded electronics industry through the use of time-saving industry-standard hardware and software solutions. With unique concepts like Remote Access, One New Product/Day, Multi-Architectural IDE and, most recently, the EmbeddedWiki™ platform with more than a million ready-for-use projects, MIKROE combines its dev boards, compilers, smart displays, programmers/debuggers and 1800+ Click peripheral boards to dramatically cut development time. mikroBUS™, mikroSDK™, SiBRAIN™ and DISCON™ are open standards, and mikroBUS alone has been adopted by over 100 leading microcontroller companies and integrated on their development boards.

Your MIKROE